Artificial intelligence (AI) is rapidly transforming the modern workplace, from recruiting and AI hiring tools that screen resumes in seconds to algorithm-driven performance reviews and employee monitoring systems. But with this AI landscape come growing concerns about fairness, transparency and individual privacy.

More job seekers and employees are finding their futures shaped by algorithms, sparking tough questions. Can these systems be trusted to avoid bias? Do people understand how they really work? And when something goes wrong, who takes responsibility? These concerns are exactly why new state AI employment laws, along with the federal AI Action Plan, are becoming so important.

Why States Are Stepping In

At the federal level, the White House’s AI Action Plan outlines over 90 policy goals, signaling increasing engagement with AI governance — but for now, most of its focus is big-picture strategy rather than tailored workplace regulation. That means states are taking the lead. Public concern, advocacy efforts and court challenges are pushing states to develop AI hiring laws and algorithmic discrimination law frameworks to ensure workplace tools don’t perpetuate unfair bias or violate privacy.

For employers, the rise of state AI employment laws is more than a legal development — it’s a shift in workplace responsibility.

State AI Employment Laws Employers Should Know

California

- On October 1, 2025, new Fair Employment and Housing Act (FEHA) regulations take effect, prohibiting employers from using automated-decision systems (ADS) or hiring tools that discriminate based on protected categories like national origin, sex, disability or age

- Employers must retain records related to automated decision data for at least four years

- The state Attorney General has also cautioned industries such as health care about responsible AI deployment, stressing compliance with civil rights, data privacy and fair competition laws

Colorado

- SB 205, signed into law on May 17, 2024, is a pioneering algorithmic discrimination law that imposes duties of “reasonable care” on both developers and deployers of high-risk AI systems (such as those used in hiring or employment decisions)

- Starting February 1, 2026, covered parties must conduct impact assessments, manage risks, offer human review and disclose to the state Attorney General if algorithmic discrimination is detected, creating a strong transparency and accountability framework

- Colorado’s law is currently being reevaluated in a special legislative session amid political debate

Illinois

- Amendments to the Illinois Human Rights Act, effective January 1, 2026, explicitly ban AI use that results in discrimination against protected classes

- The new law also blocks the use of ZIP codes as stand-ins for protected traits, closing a loophole that can lead to hidden bias

- Employers are required to provide notice when AI is used in employment decisions, including the criteria assessed

New York City (Local Law)

- Enforced since July 5, 2023, NYC’s Local Law 144 requires covered employers to: 1) disclose when AI is involved in hiring decisions, 2) explain which traits the tool evaluates and 3) give applicants an option to flag possible bias or seek an alternative review

Texas

- Texas recently enacted a law regulating AI use, but experts view it as narrower in scope than other states' AI hiring laws

Activity in Other States

- Massachusetts, New Jersey, Oregon and other states have begun issuing guidance or pursuing enforcement relating to AI under existing consumer protection, privacy and anti-discrimination laws, especially in the absence of comprehensive AI-specific statutes

How New Legislation Impacts Employers

Transparency is no longer optional; job applicants and employees have a right to know when AI is influencing decisions about hiring, promotion or performance. This means companies must be prepared to explain how these systems work and how they affect outcomes.

Compliance extends beyond the workplace, as well. Many of the new laws hold employers accountable for the AI vendors they partner with, making it essential to scrutinize external tools for bias and privacy safeguards. Recordkeeping is another critical factor. Laws in states like California and Colorado require employers to maintain detailed documentation, including impact assessments and decision audits, to prove that AI tools are being used fairly.
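
For teams that want to see what this kind of recordkeeping might look like in practice, here is a minimal Python sketch of a decision record with a retention date. It is an illustration only, not a compliance tool: the `AIDecisionRecord` class, its field names and the `add_years` helper are hypothetical, the four-year figure is the California minimum cited above, and the assumption that the retention clock starts on the decision date should be confirmed with counsel for each jurisdiction.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

# Assumption: four years, per the California figure cited in this article.
RETENTION_YEARS = 4


def add_years(d: date, years: int) -> date:
    """Return the same calendar day `years` later."""
    try:
        return d.replace(year=d.year + years)
    except ValueError:
        # Feb 29 with a non-leap target year: roll forward to Mar 1,
        # so the record is kept slightly longer rather than shorter.
        return date(d.year + years, 3, 1)


@dataclass(frozen=True)
class AIDecisionRecord:
    """Hypothetical record of one AI-assisted employment decision."""
    candidate_id: str
    tool_name: str
    decision: str
    decided_on: date
    human_reviewer: Optional[str] = None  # who reviewed the output, if anyone

    def retain_until(self, years: int = RETENTION_YEARS) -> date:
        # Assumption: the retention clock starts on the decision date.
        return add_years(self.decided_on, years)

    def may_purge(self, today: date) -> bool:
        # Purging is only considered after the retention date has passed.
        return today > self.retain_until()


record = AIDecisionRecord("c-001", "resume-screener", "advance", date(2025, 10, 1))
print(record.retain_until())  # 2029-10-01
```

Keeping the reviewer and tool name on each record is one way to show, later, that a human was involved and which vendor system produced the recommendation.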

At the core, these changes demand a cultural shift toward accountability. Employers need to treat AI not as a “behind the scenes” tool but as a regulated process — one that requires oversight, human judgment and clear documentation at every step. Those who adapt early will find it easier to demonstrate compliance and build trust with employees in this evolving legal landscape.

Smart Practices for Employers Using AI

To effectively embrace AI while avoiding legal pitfalls, employers should:

- Conduct regular audits of AI tools to detect bias and ensure fairness

- Stay informed of laws state by state, since state AI employment laws vary significantly

- Communicate openly with employees on how AI is used, and gather informed consent when sensitive personal data is involved

- Involve HR and legal teams early in AI tool adoption to monitor compliance and policy alignment
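
As a rough illustration of what a basic bias audit can measure, the Python sketch below compares selection rates across groups and computes each group's impact ratio (its rate divided by the highest group's rate). The function names and sample data are hypothetical, and the 0.8 threshold is the familiar "four-fifths" rule of thumb used as a screening signal, which is an assumption here rather than a test defined by the laws discussed in this article. A real audit would involve legal review and far more careful statistics.

```python
from collections import Counter


def selection_rates(outcomes):
    """outcomes: iterable of (group, was_selected) pairs -> {group: rate}."""
    totals = Counter()
    selected = Counter()
    for group, was_selected in outcomes:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {g: selected[g] / totals[g] for g in totals}


def impact_ratios(rates):
    """Divide each group's selection rate by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        # Nobody was selected in any group, so the ratio is undefined.
        return {g: None for g in rates}
    return {g: r / best for g, r in rates.items()}


def flag_groups(ratios, threshold=0.8):
    """Return groups whose impact ratio falls below the threshold."""
    return sorted(g for g, r in ratios.items() if r is not None and r < threshold)


# Hypothetical screening results: group label, whether the tool advanced them.
sample = (
    [("A", True)] * 40 + [("A", False)] * 60
    + [("B", True)] * 25 + [("B", False)] * 75
)

rates = selection_rates(sample)
ratios = impact_ratios(rates)
flagged = flag_groups(ratios)
print(flagged)  # ['B']
```

A flagged group is a prompt for closer review of the tool and its inputs, not proof of discrimination on its own.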

Looking Ahead and Staying Compliant

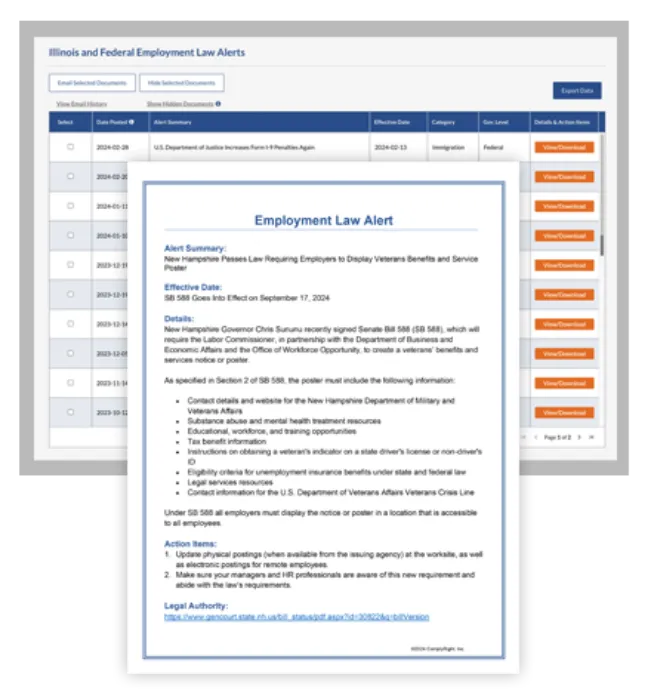

The momentum around AI in employment law is just getting started. Expect more state-level AI hiring laws, stricter oversight and a future where federal and state rules may align. Staying agile and informed is key — and the Employment Law Alert Service makes it easier. Subscribers get timely alerts and clear summaries of new and updated laws, so you can focus on running your business while keeping your AI hiring practices compliant and transparent.